The AI Core for Predictive API Infrastructure

Powered by TensorFlow, integrated into AVAP for predictive automation and multi-LLM orchestration.

Predictive, AI-Driven API Infrastructure Management

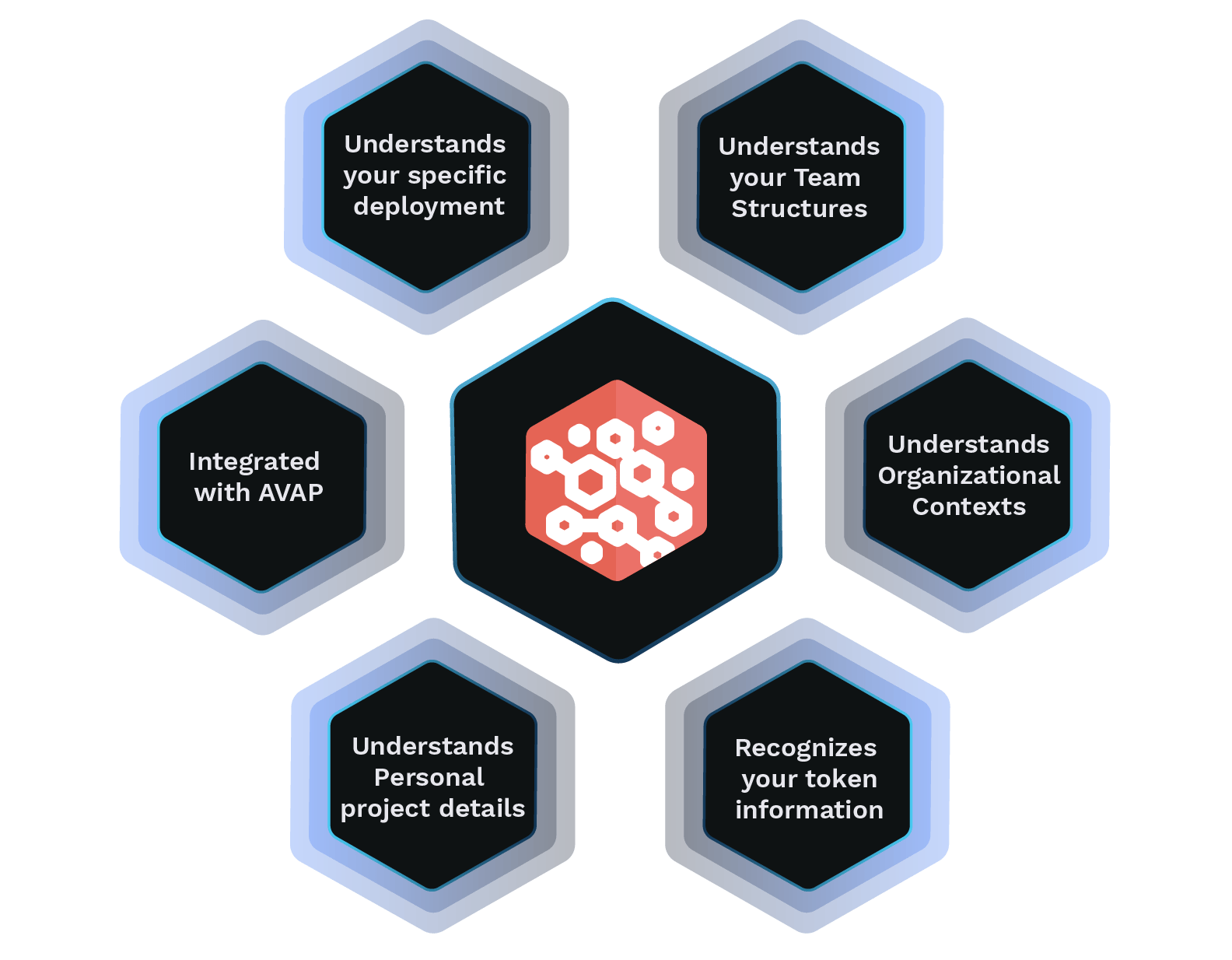

Brunix is a TensorFlow-powered AI engine built for AIOps in API-driven environments. It delivers predictive management, intelligent routing, and unattended automation across the entire API lifecycle, from design and deployment to scaling and optimization.

With a native multi-LLM orchestration layer (ChatGPT, LLaMA, Gemini…), Brunix can combine operational intelligence with conversational capabilities when needed, all behind a single interface.

Seamlessly integrated into AVAP, Brunix strengthens security, ensures high availability, and accelerates delivery while maximizing infrastructure performance.

AI-driven development

Multi-LLM Orchestration with a Proprietary AI Core

At its heart, Brunix runs on our own TensorFlow-based AI engine, specialized in predictive API infrastructure management. On top of this core, Brunix can orchestrate multiple LLMs, such as ChatGPT, LLaMA, and Gemini, enabling conversational assistance, advanced data analysis, and custom automation flows without locking you into a single model.

Intelligent Process Optimization

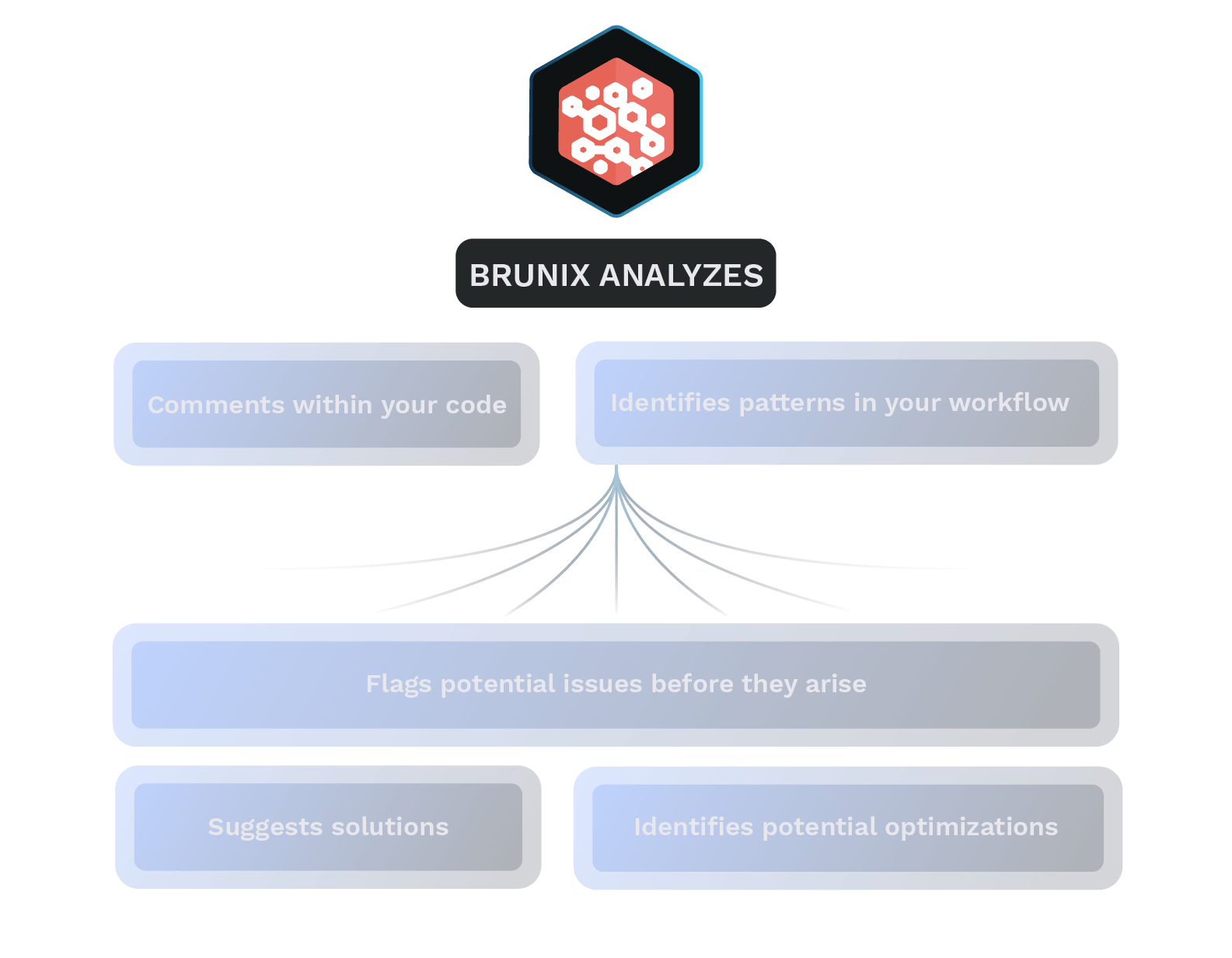

Brunix actively improves your development pipeline. By analyzing coding patterns, deployment data, and infrastructure performance, it automatically suggests efficiency improvements, optimizes API integrations, and eliminates redundant tasks. This proactive approach reduces time-to-market, enhances team productivity, and minimizes operational overhead.

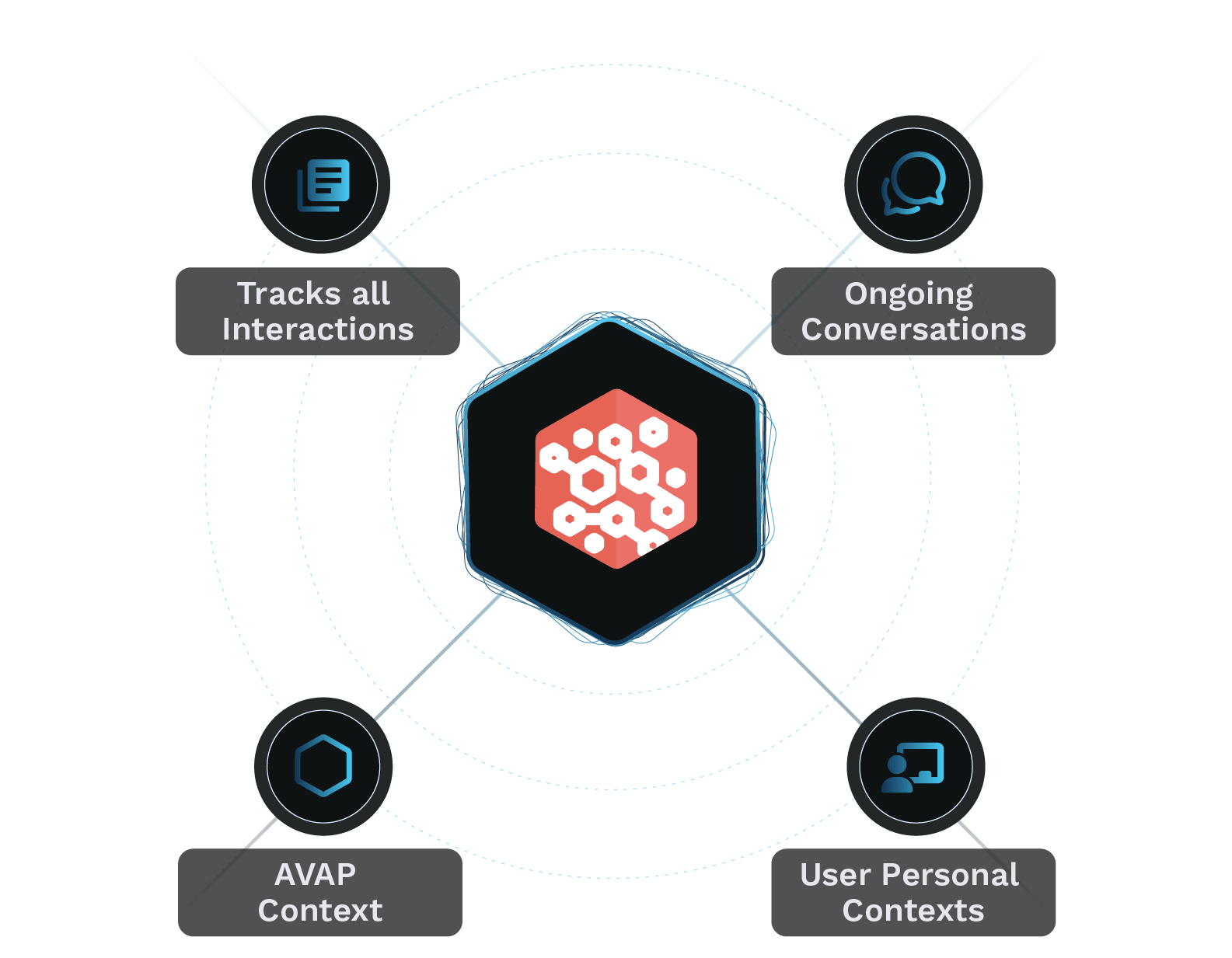

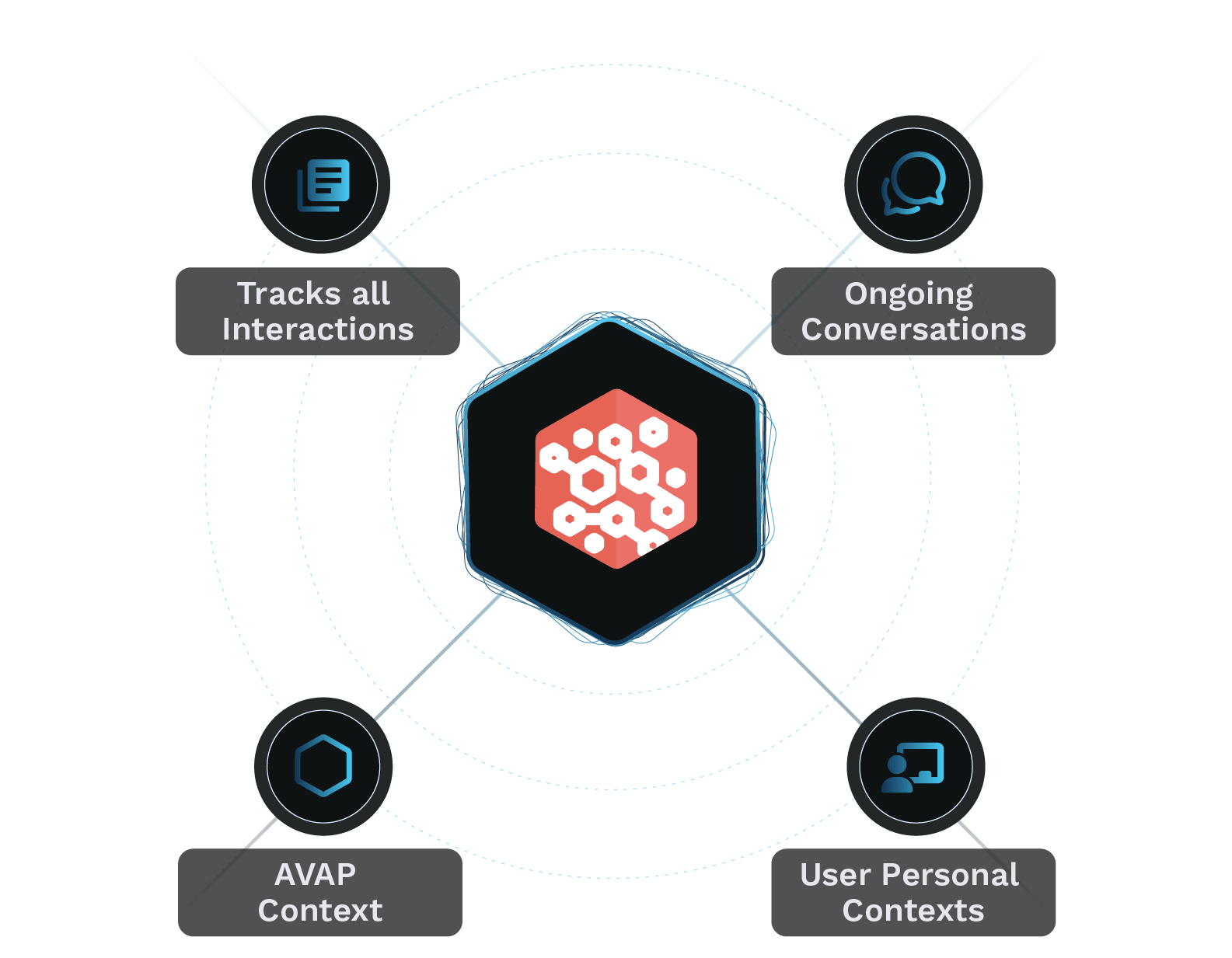

Contextual Memory

Brunix maintains a rich, continuous memory of your entire development process. Unlike AI assistants that treat each request in isolation, Brunix tracks all interactions and draws on ongoing conversations, your project's AVAP context, and any personal context you provide. This allows Brunix to deliver highly relevant, tailored support that evolves with your project, providing more accurate suggestions and helping you troubleshoot more effectively.